Content Warning

Were I still using that POS Duolingo, this would have made me stop.

https://fortune.com/2025/05/20/duolingo-ai-teacher-schools-childcare/

Content Warning

#Technofeudalists and the perceived #AI threat incongruence

(2/n)

... importantly, there is, in my view, no discrepancy on a strategic (intent) level:

a) #Musk's primary goal is, as you know, to transform #humanity into a #MultiplanetarySpecies. Colonizing the closest possible habitable planet (#Mars, #Terraforming) is indispensable for this lofty aim. That will cost the global economy billions of dollars for many years, money that will then be missing for feeding the poor...

Content Warning

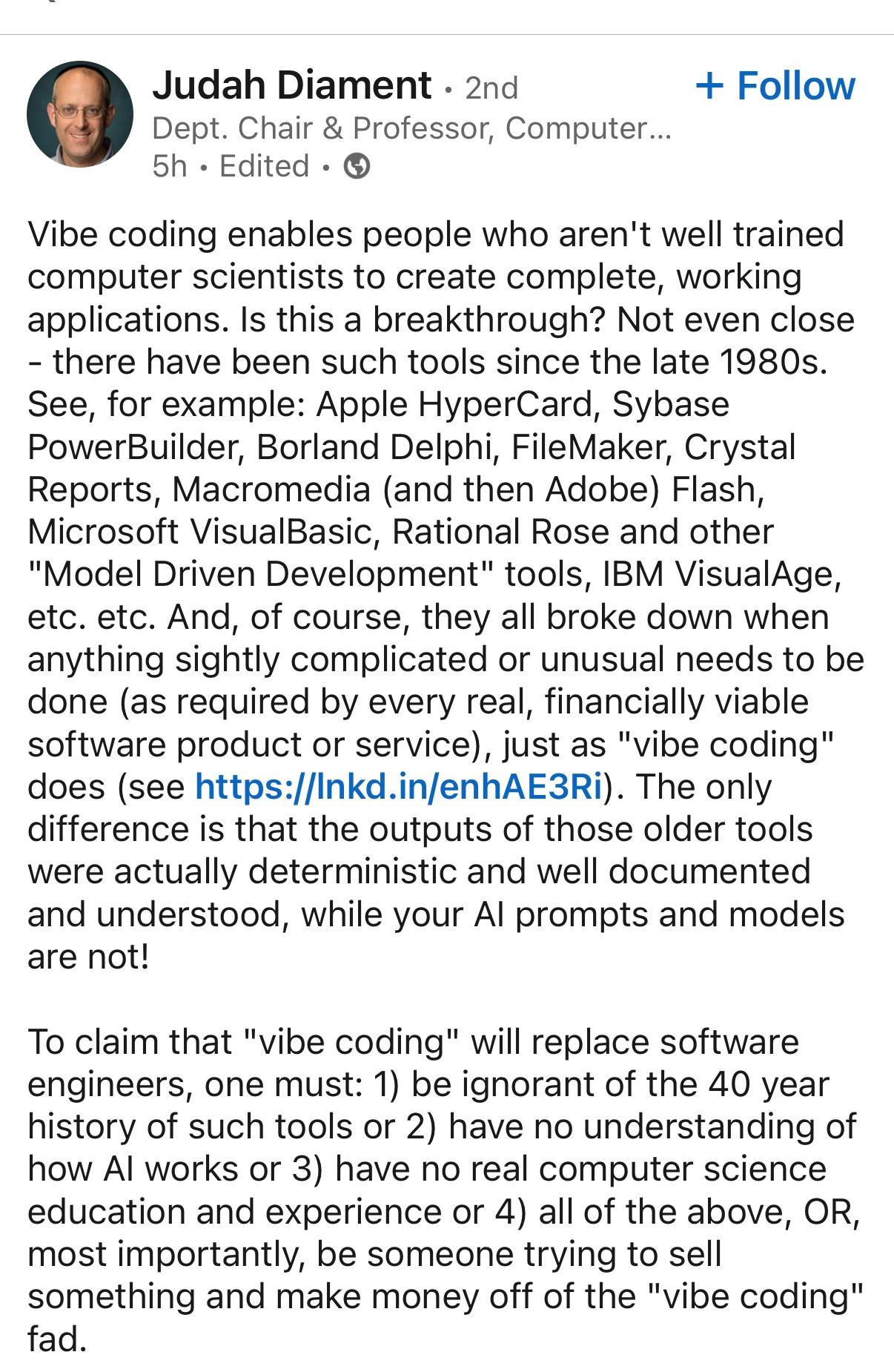

A computer scientist’s perspective on vibe coding:

Content Warning

Small government, free market Republicans, eh? This doesn't look like corruption at all, oh no.

"...no State or political subdivision thereof may enforce any law or regulation regulating artificial intelligence models, artificial intelligence systems, or automated decision systems"

Content Warning

They are convinced that there is a pot of gold at the end of the rainbow and they call it AI.

Content Warning

I put my phone in the box. He didn’t budge. I took off my smart watch and added it in.

“Are you sure you have no more computers? The detector sends out a brief EMP. It would be a shame to destroy any gadgets. Or injure you." He was staring at the side of my face.

Ah. I removed the Connex from my temple. I’d forgotten it was there.

He ushered me into what looked like an old electronic doorway, then pressed a button. A light flashed.

"You're free to enter. Enjoy." No smile.

I passed through a corridor to a desk where a receptionist smiled. "First time?"

"Yes, is it obvious?"

"Don't worry. It's simple. Through the double doors there you'll find the main selection of books, by era and topic. It's colour-coded and easy to follow. You'll need these if you want to touch anything." She put a paper mask and thin laboratory gloves on the desk.

"Behind you is the iffy section, as we call it. Books printed after 2015."

"2015?! I thought AI-printed books only appeared in the mid-2020s."

"That's probably true, but we can't be sure. Preserving authentic pre-AI knowledge is our raison d'être. We can't be too safe."

Her look turned serious and I saw the devotion to the cause in her eyes. Since the Big Corruption of '32, no digital files could be trusted to replicate original human knowledge. This library was a time capsule.

"Can books be taken out?"

"No, I'm afraid not. We couldn't let them back in, as they could be fakes."

"So, can I copy things? My phone and Connex were taken away. Do you have a camera to message me chapters?"

"No, we're strictly machine-free. But we have several scribes. They're very good." She was enjoying my puzzled look.

"They can copy down whole pages for you. With pen and paper," she answered my unspoken question.

"Pen and paper?" These were words from tales and myths.

"Come, I'll show."

#devotion #MastoPrompt #microfiction #AI #ArtificialIntelligence

Content Warning

Tasked with breaking the Enigma code, an AI system trained to recognise German using Grimm’s fairytales, utilizing 2,000 virtual servers, cracked a coded message in 13 minutes.

Let's pause for a second to let that sink in.

And let's think for a second:

Alan Turing's “Bombes” could decipher two messages every minute.

😱. Suddenly the AI result isn't all that impressive any more.

AI cuts out all the research, knowledge gain, and insight. With all the resources available today, it still performs worse than a solution from more than 80 years ago (26 times worse, to be precise).
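The 26× figure follows from the numbers quoted above, assuming the Bombes kept up a steady two messages per minute over the same 13 minutes the AI system needed for a single message:

$$
2\ \frac{\text{messages}}{\text{minute}} \times 13\ \text{minutes} = 26\ \text{messages} \quad \text{vs.} \quad 1\ \text{message}
$$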

And this is seen as an impressive innovation 🤡🤯

"Sources":

Influencer post https://mastodon.social/@Caramba1/114470245795906227

Guardian article

https://www.theguardian.com/science/2025/may/07/todays-ai-can-crack-second-world-war-enigma-code-in-short-order-experts-say

Content Warning

🔗 Read here: https://tinyurl.com/mvvdemen

#Buddhism #AI #ArtificialIntelligence #OnlineEvents #Technology #Psychology #Spirituality #HongKong #BuddhistStudies

Content Warning

https://prospect.org/power/2025-03-25-bubble-trouble-ai-threat/

But I want to make an aside about how uncritically people use the term "foundation models" and discuss the "reasoning" of these models, when it is very likely that the models literally memorized all these benchmarks. It truly is like the story of the emperor's new clothes. Everyone seems to be in on it, and you're the crazy one going, "But HE HAS NO CLOTHES." 🧵

Content Warning

There are *many* problems with #AI. The biggest one, the one that subsumes the rest, is that it's *expensive*.

And it's not even *good* at anything useful.

They call it "hallucination" but it's really sparkling #Fail. Any human doing a job with that level of fail would have been fired long ago.

Content Warning

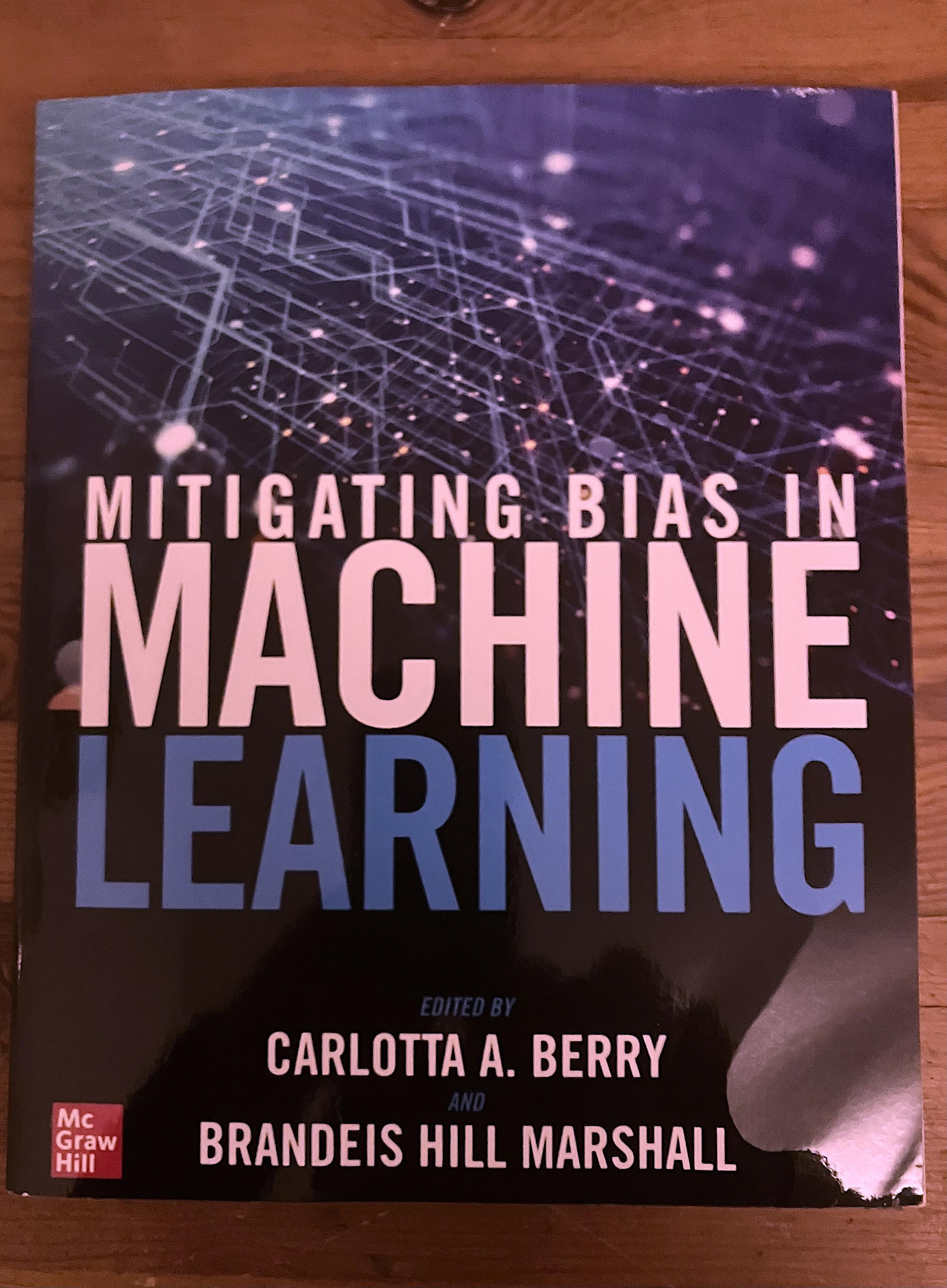

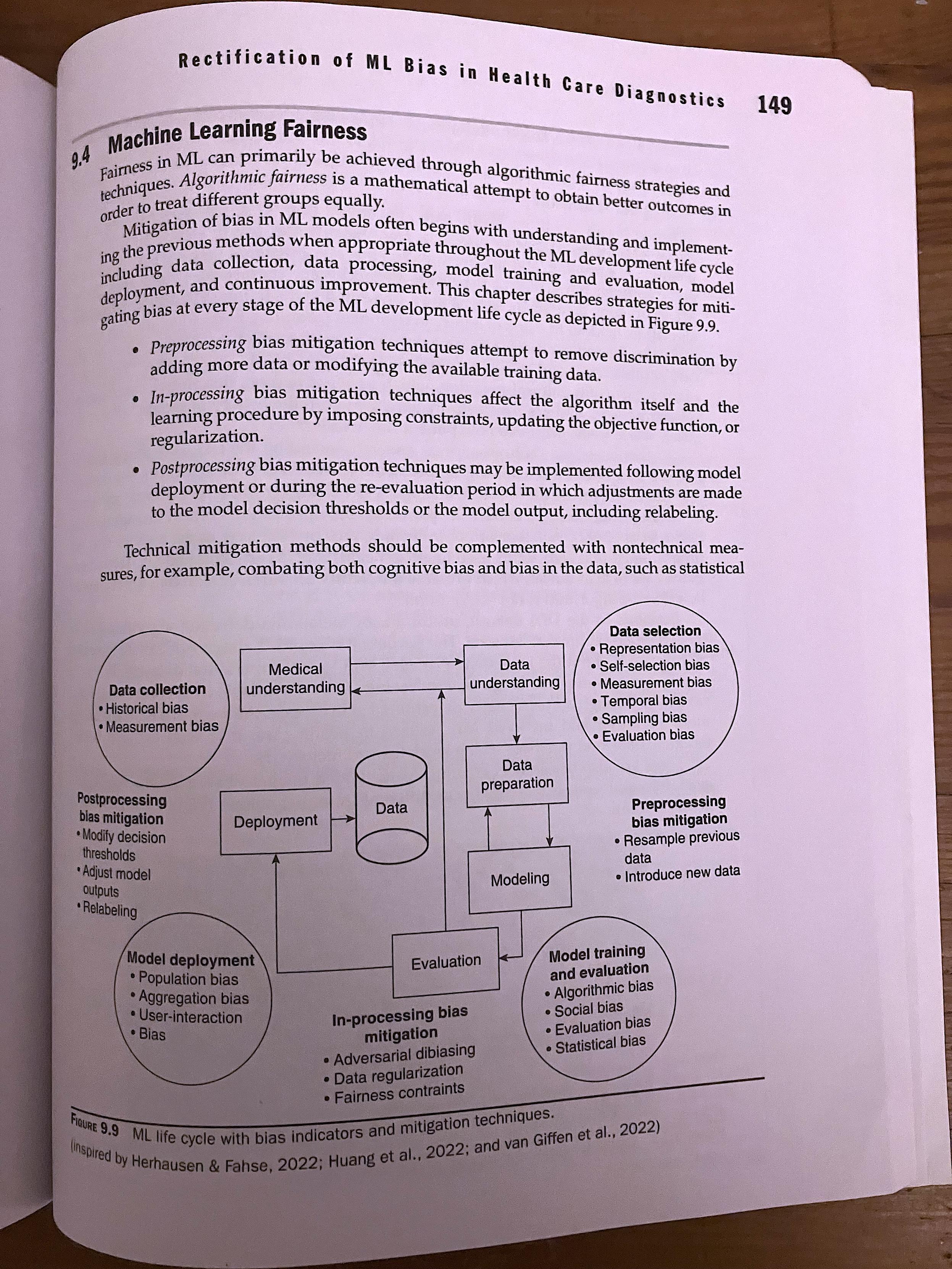

Mitigating Bias in Machine Learning

Edited by @drcaberry

Brandeis Hill Marshall

„We dedicate this work to the diverse voices in #AI who work tirelessly to call out bias and work to mitigate it and advocate for #EthicalAI every day.

Some of the trailblazers doing the work are

@ruha9

@timnitGebru

@cfiesler

Joy Buolamwini

@ruchowdh

@safiyanoble

We also dedicate this work to the future engineers, scientists, and sociologists who will use it to inspire them to join the charge.“

Content Warning

#Technofeudalists and the perceived #AI threat incongruence

(1/n)

I'm surprised that you, as an #AI and #TESCREAL expert, see a discrepancy in this. For the morbid and haughty minds of #Longtermists like #Elon, there is no discrepancy, IMHO:

1) At least since Goebbels, fascists often accuse others of what they have done or are about to do themselves; or they deflect, flood the zone, etc. That is on the tactical/communications-strategy level.

2) More...

Content Warning

Anti-AI tools

Glaze

https://glaze.cs.uchicago.edu

Glaze is a system designed to protect human artists by disrupting style mimicry. At a high level, Glaze works by understanding the AI models that are training on human art, and using machine learning algorithms, computing a set of minimal changes to artworks, such that it appears unchanged to human eyes, but appears to AI models like a dramatically different art style.
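Glaze's own optimization targets style features and is not reproduced here. To illustrate the general idea of "minimal changes that look the same to humans but not to a model", here is a sketch of a different, simpler technique: one fast-gradient-sign (FGSM) step against a toy linear score. The model, weights and pixel values are hypothetical; each pixel moves by at most `eps`, yet the score shifts by `eps` times the sum of the absolute weights.

```python
from typing import List


def sign(v: float) -> int:
    """Return -1, 0 or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def linear_score(w: List[float], x: List[float]) -> float:
    """Toy 'model': a single linear score w·x (hypothetical stand-in for a real network)."""
    return sum(wi * xi for wi, xi in zip(w, x))


def fgsm_linear(w: List[float], x: List[float], eps: float) -> List[float]:
    """One fast-gradient-sign step: nudge every pixel by at most eps in the
    direction that increases the score, then clip to the valid range [0, 1].
    For a linear score the gradient with respect to x is simply w."""
    return [min(1.0, max(0.0, xi + eps * sign(wi))) for wi, xi in zip(w, x)]


# Hypothetical example: three "pixels" and three weights.
w = [0.5, -1.0, 2.0]
x = [0.5, 0.5, 0.5]
x_adv = fgsm_linear(w, x, eps=0.02)
print(x_adv, linear_score(w, x), linear_score(w, x_adv))
```

Real cloaking tools work against deep networks and perceptual budgets rather than a single linear score, so treat this only as the intuition behind "small change, large model effect".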

Nightshade

https://nightshade.cs.uchicago.edu/

Nightshade, a tool that turns any image into a data sample that is unsuitable for model training

HarmonyCloak

https://mosis.eecs.utk.edu/harmonycloak.html

HarmonyCloak is designed to protect musicians from the unauthorized exploitation of their work by generative AI models. At its core, HarmonyCloak functions by introducing imperceptible, error-minimizing noise into musical compositions.

Kudurru

https://kudurru.ai

Actively block AI scrapers from your website with Spawning's defense network

Nepenthes

https://zadzmo.org/code/nepenthes/

This is a tarpit intended to catch web crawlers. Specifically, it's targeting crawlers that scrape data for LLMs - but really, like the plants it is named after, it'll eat just about anything that finds its way inside.
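One common tarpit trick is to send a response very slowly so that a crawler waiting for the full page ties up a connection for a long time. The sketch below is a generic illustration of that idea, not Nepenthes's code; `drip`, the chunk size and the delay are hypothetical, and `sleep` is injectable so the example runs instantly.

```python
import time
from typing import Callable, Iterator, List


def drip(body: bytes, chunk_size: int = 16, delay_s: float = 1.0,
         sleep: Callable[[float], None] = time.sleep) -> Iterator[bytes]:
    """Yield `body` in small chunks, pausing `delay_s` seconds between chunks,
    so a client that waits for the whole response is kept busy for a long time."""
    for start in range(0, len(body), chunk_size):
        if start:  # no pause before the first chunk
            sleep(delay_s)
        yield body[start:start + chunk_size]


# Example with a fake sleep: record the pauses instead of actually waiting.
pauses: List[float] = []
chunks = list(drip(b"x" * 100, chunk_size=16, delay_s=2.0, sleep=pauses.append))
print(len(chunks), sum(pauses))
```

With real `time.sleep`, a 100-byte page sent this way would take about 12 seconds per request.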

AI Labyrinth

https://blog.cloudflare.com/ai-labyrinth/

Today, we’re excited to announce AI Labyrinth, a new mitigation approach that uses AI-generated content to slow down, confuse, and waste the resources of AI Crawlers and other bots that don’t respect “no crawl” directives.
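A "no crawl" directive usually lives in a site's `robots.txt`. The sketch below, using Python's standard `urllib.robotparser`, shows how a well-behaved crawler checks such rules before fetching; the rules themselves are a hypothetical example that blocks OpenAI's `GPTBot` user agent entirely and keeps every other crawler out of `/private/`. Tools like AI Labyrinth are aimed at bots that skip this check.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block GPTBot everywhere, everyone else only from /private/.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def make_parser(text: str) -> RobotFileParser:
    """Parse robots.txt rules from a string instead of fetching them over HTTP."""
    rp = RobotFileParser()
    rp.parse(text.splitlines())
    return rp


rp = make_parser(ROBOTS_TXT)
for agent, url in [("GPTBot", "https://example.com/page"),
                   ("ExampleBrowser", "https://example.com/page"),
                   ("ExampleBrowser", "https://example.com/private/x")]:
    print(agent, url, rp.can_fetch(agent, url))
```

Compliance is voluntary: nothing in `robots.txt` technically stops a crawler, which is why the tools in this list exist.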

More tools, suggested in the comments on this post:

Anubis

https://xeiaso.net/blog/2025/anubis/

Anubis is a reverse proxy that requires browsers and bots to solve a proof-of-work challenge before they can access your site.
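The proof-of-work idea can be sketched as a hashcash-style puzzle: the client must find a nonce whose SHA-256 digest, combined with a server-issued challenge, starts with a given number of zero hex digits, while the server checks the answer with a single hash. This is a generic sketch under that assumption, not Anubis's actual challenge format; the challenge string and difficulty are hypothetical.

```python
import hashlib
from itertools import count


def pow_digest(challenge: str, nonce: int) -> str:
    """SHA-256 hex digest of the challenge string followed by the nonce."""
    return hashlib.sha256(f"{challenge}{nonce}".encode()).hexdigest()


def solve(challenge: str, difficulty: int) -> int:
    """Client side: brute-force nonces until the digest starts with
    `difficulty` zero hex digits (about 16**difficulty tries on average)."""
    target = "0" * difficulty
    for nonce in count():
        if pow_digest(challenge, nonce).startswith(target):
            return nonce
    raise AssertionError("unreachable: count() never ends")


def verify(challenge: str, nonce: int, difficulty: int) -> bool:
    """Server side: one hash is enough to check the client's answer."""
    return pow_digest(challenge, nonce).startswith("0" * difficulty)


nonce = solve("example-challenge", difficulty=3)
print(nonce, pow_digest("example-challenge", nonce))
```

The asymmetry is the point: one visitor pays a few thousand hashes once, while a scraper hammering thousands of pages pays that cost on every challenge.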

Iocaine

https://iocaine.madhouse-project.org

The goal of iocaine is to generate a stable, infinite maze of garbage.
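"Stable" and "infinite" can be reconciled by deriving each page deterministically from its URL: the same path always yields the same garbage, and every page links to new paths that can be generated the same way. The sketch below illustrates that idea with a seeded random generator; it is not iocaine's implementation, and the word list and `maze_page` function are hypothetical.

```python
import hashlib
import random

# Hypothetical filler vocabulary; a real tool would use a much larger corpus.
WORDS = ["lorem", "ipsum", "river", "copper", "lantern",
         "orbit", "meadow", "cipher", "velvet", "quarry"]


def maze_page(path: str, n_words: int = 30, n_links: int = 5) -> str:
    """Return the same garbage page every time `path` is requested (stable),
    with links to further generated paths (infinite)."""
    # Seed a private RNG from a hash of the path so output is deterministic.
    seed = int.from_bytes(hashlib.sha256(path.encode()).digest()[:8], "big")
    rng = random.Random(seed)
    text = " ".join(rng.choice(WORDS) for _ in range(n_words))
    base = path.rstrip("/")
    links = [
        f'<a href="{base}/{rng.getrandbits(32):08x}">{rng.choice(WORDS)}</a>'
        for _ in range(n_links)
    ]
    return f"<p>{text}</p>\n" + "\n".join(links)


print(maze_page("/start"))
```

Because nothing is stored, the maze costs the server almost no memory, while a crawler that follows every link never reaches the end.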

Content Warning

From @pluralistic https://pluralistic.net/2025/03/18/asbestos-in-the-walls/#government-by-spicy-autocomplete

Content Warning

It's a real pleasure to listen to such a rich conversation on such diverse topics.

I especially liked how they addressed the way the #AI industry labels people and methods.

It's the same for me: I've ended up calling myself a #DataScientist when I'm actually a #mechanical #engineer with a #PhD in #statistics. The industry has decided that I am something I never studied.

https://www.techwontsave.us/episode/267_ai_hype_enters_its_geopolitics_era_w_timnit_gebru